How to play video files through DirectShow

DirectShow is a multimedia framework and API produced by Microsoft for software developers to perform various operations with media files or streams. Based on the Microsoft Windows Component Object Model (COM) framework, DirectShow provides a common interface for media across many programming languages, and is an extensible, filter-based framework that can render or record media files on demand.

IMPORTANT NOTE ABOUT DIRECTSHOW PERFORMANCE: Being based on DirectX, DirectShow performs better on modern video cards that support Direct3D and hardware acceleration. If the video card does not support these features, you may experience a heavy CPU load during video rendering.

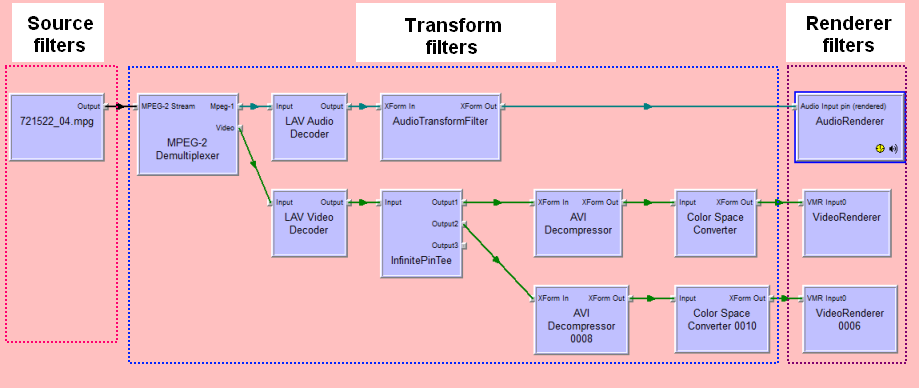

DirectShow divides a complex multimedia task like video playback into a sequence of fundamental processing steps known as "filters". Each filter, which represents one stage in the processing of the data, has input and/or output pins that may be used to connect the filter to other filters. The generic nature of this connection mechanism enables filters to be connected in various ways so as to implement different complex functions. To implement a specific complex task, a developer must first build a "filter graph" by creating instances of the required filters, and then connecting the filters together.

There are three main types of filters:

Source filters: These provide the source streams of data. For example, reading raw bytes from any media file.

Transform filters: These transform data received from another filter's output. For example, adding text on top of video or decompressing an MPEG frame.

Renderer filters: These render the data. For example, sending audio to the sound card, drawing video on the screen or writing data to a file.

In the filter graph shown above, generated by the GraphEdt.exe application provided by Microsoft, we can find, from left to right, a source filter that reads an MP4 file and splits the video and audio streams, decoder filters that parse and decode the separated video and audio streams and, finally, renderer filters that play the raw video and audio samples. Each filter has one or more pins that can be used to connect that filter to other filters. Every pin acts as either an input or an output for data flowing from one filter to another. Depending on the filter, data is either "pulled" from an input pin or "pushed" to an output pin in order to transfer data between filters. Each pin can connect to only one other pin, and the two pins must agree on the kind of data they exchange.
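The connection rules described above can be illustrated with a small toy model. This is only a sketch: `Filter`, `Pin`, `connect` and `route` are hypothetical names invented for the example, the media type strings are placeholders, and nothing here calls DirectShow itself.

```python
class Filter:
    """Toy filter: a name plus a list of pins (not a DirectShow interface)."""

    def __init__(self, name):
        self.name = name
        self.pins = []

    def pin(self, direction, media_type):
        """Create a pin on this filter; direction is "in" or "out"."""
        new_pin = Pin(self, direction, media_type)
        self.pins.append(new_pin)
        return new_pin


class Pin:
    """Toy pin: belongs to one filter and connects to at most one peer."""

    def __init__(self, owner, direction, media_type):
        self.owner = owner
        self.direction = direction
        self.media_type = media_type
        self.peer = None


def connect(out_pin, in_pin):
    """Connect an output pin to an input pin, enforcing the rules above."""
    if out_pin.direction != "out" or in_pin.direction != "in":
        raise ValueError("connections go from an output pin to an input pin")
    if out_pin.peer is not None or in_pin.peer is not None:
        raise ValueError("each pin can connect to only one other pin")
    if out_pin.media_type != in_pin.media_type:
        raise ValueError("pins must agree on the kind of data they exchange")
    out_pin.peer, in_pin.peer = in_pin, out_pin


def route(out_pin):
    """Follow connections downstream and return the filter names visited."""
    names = [out_pin.owner.name]
    pin = out_pin
    while pin is not None and pin.peer is not None:
        next_filter = pin.peer.owner
        names.append(next_filter.name)
        outputs = [p for p in next_filter.pins if p.direction == "out"]
        pin = outputs[0] if outputs else None
    return names


# Video branch of a graph similar to the one described above.
source = Filter("MP4 source/splitter")
video_decoder = Filter("Video decoder")
video_renderer = Filter("Video renderer")

src_video = source.pin("out", "video/compressed")
src_audio = source.pin("out", "audio/compressed")
dec_in = video_decoder.pin("in", "video/compressed")
dec_out = video_decoder.pin("out", "video/raw")
ren_in = video_renderer.pin("in", "video/raw")

connect(src_video, dec_in)
connect(dec_out, ren_in)
VIDEO_ROUTE = route(src_video)
```

In this model a source, a transform and a renderer differ only in which pin directions they expose, which mirrors the three filter types listed earlier.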

By default, DirectShow includes a limited number of filters for decoding some common media file formats such as MPEG-1, MP3, Windows Media Audio, Windows Media Video and MIDI, for media containers such as AVI, ASF and WAV, plus some splitters/demultiplexers, multiplexers, source and sink filters and some static image filters. However, DirectShow's standard format repertoire can be easily expanded by means of a variety of commercial and open source filters. Such filters enable DirectShow to support virtually any container format and any audio or video codec. One of the best packages containing most audio and video codecs for DirectShow is the K-Lite Codec Pack, which is available in both x86 and x64 versions and can be downloaded for free.

IMPORTANT NOTE ABOUT X86 AND X64 VERSIONS OF WINDOWS: When the container application is compiled for "Any CPU" or for "x64" and runs on an x64 version of Windows, DirectShow automatically searches for x64 versions of the codecs in order to create the filter graph. The K-Lite Codec Pack comes with both x86 and x64 versions of its binaries so, when running on an x64 version of Windows, it is very important to make sure that the x64 version is installed.
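The rule in this note can be written down as a small decision helper. This is a sketch with a hypothetical function name; it assumes that an "Any CPU" build runs as a 64-bit process on x64 Windows (build options that force 32-bit execution are ignored) and that an x86 build always needs x86 codecs.

```python
def codec_bitness_needed(build_target, os_is_64bit):
    """Return "x86" or "x64": the codec build the process will look for.

    build_target is one of "x86", "x64" or "Any CPU".
    """
    if build_target == "x86":
        return "x86"
    if build_target == "x64":
        if not os_is_64bit:
            raise ValueError("an x64 build cannot run on a 32-bit system")
        return "x64"
    if build_target == "Any CPU":
        # Assumption: "Any CPU" follows the bitness of the operating system.
        return "x64" if os_is_64bit else "x86"
    raise ValueError("unknown build target: %r" % (build_target,))
```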

IMPORTANT NOTE ABOUT BUGGY VIDEO DRIVERS AND BLANK SCREENS: On Windows 10 and higher versions, the default video renderer is VMR7 which, because it uses DirectDraw for its rendering, is quite efficient in terms of CPU usage and speed. Unfortunately, many video drivers have been found unable to manage its video flow properly, which can result in a blank video window that is totally white or totally black. When this happens, first check with the video card manufacturer whether an updated driver is available; if it is not, disable the VMR7 video renderer through the VideoPlayer.DisableVMR7 method.

Audio DJ Studio, through the embedded VideoPlayer class and the related DisplayVideoPlayer property, simplifies the developer's life by leveraging DirectShow to render video streams to a graphic surface and to manage the contents of audio streams separately, making it possible to apply most of the audio features (special effects, output redirection, visual feedbacks, etc.) available for regular audio files.

IMPORTANT NOTE ABOUT CODECS AND FILTERS: By default, DirectShow includes a limited number of filters for decoding some common media file formats such as MPEG-1, MP3, Windows Media Audio, Windows Media Video and MIDI, for media containers such as AVI, ASF and WAV, plus some splitters/demultiplexers, multiplexers, source and sink filters and some static image filters. However, DirectShow's standard format repertoire can be easily expanded by means of a variety of commercial and open source third-party filters. Such filters enable DirectShow to support virtually any container format and any audio or video codec. This means that, when Audio DJ Studio loads a video clip, most of the binary code involved lives inside external third-party components. MultiMedia Soft cannot guarantee that filters provided by Microsoft or by third-party companies will always behave as expected: they could contain bugs or memory leaks and, in these cases, there is no workaround that MultiMedia Soft could apply to avoid this kind of problem. Appendix B contains a few guidelines that may help in managing the DirectShow configuration through the tools provided with K-Lite.

Before loading a video clip into a given player, we must decide how we will manage the output of the video stream and the output of the audio stream:

- Video stream output management: The video stream of a video clip is rendered over a graphical surface, named "output video window", provided by an existing window, form or dialog box. You can create, position and size this output video window using the VideoPlayer.VideoWindowAdd method, then move it through the VideoPlayer.VideoWindowMove method and hide/show it through the VideoPlayer.VideoWindowShow method. After the output window has been added, you can change it through the VideoPlayer.VideoWindowChangeTarget method.

  A couple of important notes:

  - output video windows added when a video clip is already loaded into a player will be instanced only after loading a new video clip

  - you can create more than one of these video windows through further calls to the VideoPlayer.VideoWindowAdd method: in this case the video stream will be reproduced in parallel and in perfect sync on all of the instanced video windows

  - if you simply need to play back the audio stream of the video clip, you don't need to create an output video window: if no output video window is found at playback time, the component removes the video stream from the filter graph, saving some CPU time

  - if you simply want to render the video stream inside a floating window, the component can redirect the video stream to the "Active Movie" window provided by DirectShow: in this case you simply need to call the VideoPlayer.VideoStreamSendToActiveMovieWin method without making any previous call to the VideoPlayer.VideoWindowAdd method

  - if you want to render the video clip in full screen mode, refer to the How to render video clips in full screen tutorial

As you may know, Audio DJ Studio can play several media files at the same time through different players instanced by the InitSoundSystem method: if you need to mix/blend the video output streams of two video clips inside the same output window, with full control over alpha channel transparency and positioning, refer to the How to use the video mixer tutorial.

- Audio stream output management: by default DirectShow sends the audio stream to the system default DirectSound device, as seen inside the filter graph above, by instancing the "Default DirectSound Device" audio renderer filter. Audio DJ Studio replaces the default audio renderer filter with its own renderer filter, allowing better control over audio stream playback. There are three main modes for audio management, which can be set through the VideoPlayer.AudioRendererModeSet method:

  - Custom audio management: this is the default mode and allows applying most of the audio features (special effects, output redirection, visual feedbacks, support for ASIO devices, etc.) available for regular audio files by using exactly the same methods, like StreamVolumeLevelSet, StreamOutputDeviceSet, etc.; the only drawback of this mode is that it costs some more CPU (around 5-10%).

  - DirectSound standard management: compared to the default DirectShow implementation, this mode allows redirecting the audio output to a specific DirectSound device through the VideoPlayer.AudioRendererDsDeviceSet method and controlling the volume and balance of the audio stream. Available DirectSound output devices registered as DirectShow audio renderers can be enumerated through the VideoPlayer.AudioRendererDsDeviceGetCount and VideoPlayer.AudioRendererDsDeviceGetDesc methods.

  - Null rendering: in cases where only the video stream is needed, this mode removes the audio stream from the filter graph, saving some CPU time.

IMPORTANT NOTE ABOUT AUDIO AND VIDEO SYNC: As mentioned above, in order to allow applying special effects to the audio stream of video clips, Audio DJ Studio implements its own audio renderer, which is different from the one used by other players like "Windows Media Player™". By default the audio stream has a 200 milliseconds delay with respect to the video stream, because this works better with the majority of audio drivers and is hardly noticeable on modern PCs having LCD monitors instead of CRT monitors. If the delay is noticeable on your side, you can use the VideoPlayer.AudioRendererDelaySet method to remove or reduce it: this method accepts delays in the range from -200 to +500 (expressed in milliseconds). If the video stream is rendered ahead of the audio stream, a negative delay (for example -150) could remove the gap.

With certain "poor quality" sound card drivers, a negative delay could cause sound stuttering. As a general rule, since the audio delay may behave differently depending upon the target machine, it is always advisable to provide a setting, driven through a slider control, that allows fine-tuning this delay on customers' machines.

As a further note, when the InitDriversType method has been called with a parameter different from the default DRIVER_TYPE_DIRECT_SOUND and you are dealing specifically with DirectSound devices, a further improvement can be obtained by reducing the size of the DirectSound buffer through the BufferLength property from the default 500 ms to a value around 170 ms: this reduces the latency caused by the filling of DirectSound buffers and can affect the audio delay.

As a final note, if the suggestions above are not enough, the best way to keep the audio always in sync is to avoid the default audio renderer of Audio DJ Studio and use the default DirectSound audio renderer instead: as seen inside the VideoPlayerSimple sample, installed with our setup package, the audio renderer can be changed through the VideoPlayer.AudioRendererModeSet method by setting the value of the nMode parameter to AUDIO_RENDERER_MODE_CUSTOM_2 or to AUDIO_RENDERER_MODE_DS_STANDARD. In these cases, depending upon the chosen mode, you would obviously lose some of the audio-related features.
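The slider-driven setting suggested in this note could map slider positions onto the accepted delay range as sketched below. The function names and the 0-100 slider scale are assumptions made for the example; only the -200 to +500 range and the 200 ms default come from the text above, and the resulting value would then be passed to VideoPlayer.AudioRendererDelaySet by the application.

```python
MIN_DELAY_MS = -200     # lowest delay accepted, per the note above
MAX_DELAY_MS = 500      # highest delay accepted, per the note above
DEFAULT_DELAY_MS = 200  # default audio delay, per the note above


def clamp_delay_ms(value):
    """Force a requested delay into the accepted range."""
    return max(MIN_DELAY_MS, min(MAX_DELAY_MS, int(value)))


def slider_to_delay_ms(position, slider_min=0, slider_max=100):
    """Map a slider position linearly onto the accepted delay range."""
    if slider_max <= slider_min:
        raise ValueError("slider_max must be greater than slider_min")
    fraction = (position - slider_min) / (slider_max - slider_min)
    delay = MIN_DELAY_MS + fraction * (MAX_DELAY_MS - MIN_DELAY_MS)
    return clamp_delay_ms(round(delay))
```

Clamping after the linear mapping keeps the value valid even if the slider control reports a position slightly outside its nominal range.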

A video clip can be loaded into an instanced player using the VideoPlayer.Load, VideoPlayer.LoadForTempoChange or VideoPlayer.LoadForEAX methods. Because the loading is performed inside the main application's thread, depending upon the codec and the duration of the video clip, a call to one of these methods could temporarily freeze the user interface of the container application. To avoid this issue, the VideoPlayer.LoadSync, VideoPlayer.LoadSyncForTempoChange or VideoPlayer.LoadSyncForEAX methods should be preferred; when using the "Sync" methods, it is recommended to wait for the SoundSyncLoaded event before making further calls for starting playback or for obtaining information about the loaded video.
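The general pattern of waiting for a "loaded" notification before starting playback can be sketched as follows. `ToyPlayer`, `load_in_background` and `handle_loaded` are hypothetical names invented for this illustration; the sketch does not call the VideoPlayer class and makes no claim about how the component performs its loading internally.

```python
import threading


class ToyPlayer:
    """Hypothetical stand-in for a player; it is not the VideoPlayer class."""

    def __init__(self, on_loaded):
        self._on_loaded = on_loaded
        self.loaded = False
        self.playing = False

    def load_in_background(self, path):
        """Start loading on a worker thread and return immediately."""
        def work():
            # A real player would build its filter graph here.
            self.loaded = True
            self._on_loaded(path)

        worker = threading.Thread(target=work)
        worker.start()
        return worker

    def play(self):
        if not self.loaded:
            raise RuntimeError("play requested before the clip was loaded")
        self.playing = True


log = []


def handle_loaded(path):
    # Playback is started only from the "loaded" notification.
    log.append("loaded " + path)
    player.play()
    log.append("playing")


player = ToyPlayer(handle_loaded)
log.append("load requested")
# join() is used only so that this demonstration ends deterministically.
player.load_in_background("clip.mp4").join()
```

In a real user interface the handler would typically also need to hand control back to the UI thread before touching any visual control.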

After loading the video clip, you can start its playback using the VideoPlayer.Play method. As for audio streams, you have various methods for controlling playback like VideoPlayer.Pause, VideoPlayer.Resume and VideoPlayer.Stop.

With certain codecs, and only if VideoPlayer.IsSeekable returns "true", you can change the current playback position through the VideoPlayer.Seek or VideoPlayer.SeekToFrame methods; you can also move the playback position forward or backward by a predefined amount through the VideoPlayer.Forward and VideoPlayer.Rewind methods and, when a video clip is paused or stopped, you can instruct the player to step back and forth by single frames through the VideoPlayer.SeekToPreviousFrame and VideoPlayer.SeekToNextFrame methods.
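When an application mixes time-based and frame-based seeking, it usually needs to convert between the two. The following is a minimal sketch that assumes a constant frame rate; since the component reports an average frame rate, the results should be treated as approximations. All function names are hypothetical.

```python
def frame_to_ms(frame_index, frames_per_second):
    """Approximate start time, in milliseconds, of a given frame."""
    if frames_per_second <= 0:
        raise ValueError("frames_per_second must be positive")
    return round(frame_index * 1000.0 / frames_per_second)


def ms_to_frame(position_ms, frames_per_second):
    """Approximate index of the frame displayed at a given position."""
    if frames_per_second <= 0:
        raise ValueError("frames_per_second must be positive")
    return int(position_ms * frames_per_second / 1000.0)


def step_frame(current_frame, delta, total_frames):
    """Step by single frames without leaving the valid frame range."""
    return max(0, min(total_frames - 1, current_frame + delta))
```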

Once a video clip has been loaded, you can obtain several pieces of information about it:

- Duration through the VideoPlayer.GetDuration method.

- Native aspect ratio through the VideoPlayer.GetAspectRatio method.

- Native dimensions in pixels through the VideoPlayer.GetNativeSize method; because the ratio of the native dimensions varies with the video format (for example it could be 4:3 or 16:9), the control lets you keep the original aspect ratio during video stream rendering through the VideoPlayer.VideoStreamKeepAspectRatio method.

- Availability of the video stream through the VideoPlayer.IsVideoStreamAvailable method.

- Availability of the audio stream through the VideoPlayer.IsAudioStreamAvailable method.

- Total number of video frames through the VideoPlayer.FramesNumberGet method.

- Average video frame rate through the VideoPlayer.FramesAverageRateGet method.

- Codec used to decode the video stream through the VideoPlayer.CodecVideoDescGet method.

- Codec used to decode the audio stream through the VideoPlayer.CodecAudioDescGet method.

- During playback, the current position through the VideoPlayer.GetPosition method.
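Keeping the original aspect ratio means fitting the native dimensions into the output window without distortion. A common way to do this, sketched below with a hypothetical function name, is to scale by the smaller of the two ratios and center the result; this illustrates the geometry only and is not necessarily how the component computes it.

```python
def fit_keep_aspect(native_w, native_h, window_w, window_h):
    """Return (x, y, width, height) of the largest centered rectangle
    that fits the window while keeping the native aspect ratio."""
    if min(native_w, native_h, window_w, window_h) <= 0:
        raise ValueError("all dimensions must be positive")
    scale = min(window_w / native_w, window_h / native_h)
    width = round(native_w * scale)
    height = round(native_h * scale)
    return ((window_w - width) // 2, (window_h - height) // 2, width, height)
```

For example, a 16:9 clip shown in a 4:3 window gets horizontal bars above and below, while a 4:3 clip shown in a 16:9 window gets vertical bars on the sides.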

For video formats supporting multiple audio streams, you can check whether the loaded video clip contains more than one audio stream using the VideoPlayer.AudioMultiStreamCheck method and enumerate the available audio streams through the combination of the VideoPlayer.AudioMultiStreamGetCount and VideoPlayer.AudioMultiStreamGetName methods. The current audio stream can be changed using the VideoPlayer.AudioMultiStreamSelect method.

Audio DJ Studio gives the possibility to grab single video frames and store them into a graphical output file (in GIF, PNG, JPEG, BMP and TIFF format) or into a memory bitmap: refer to the How to grab frames from video files tutorial for details.

Audio DJ Studio can also extract the audio stream, in WAV PCM format, into a memory buffer or into a temporary file through the VideoPlayer.AudioTrackExtract method; once the audio stream has been extracted, the following further operations become available:

- Sound waveform analysis: see the How to obtain the sound's waveform tutorial for details.

- Detection of initial and final silence positions: through the SilenceDetectionOnPlayerRequest or the SilenceDetectionOnPlayer methods.

- Detection of beats per minute (BPM): through the RequestSoundBPM method.

- Direct access to the extracted audio stream through the VideoPlayer.AudioTrackGetMemoryPtr and VideoPlayer.AudioTrackGetMemorySize methods, when the audio stream is extracted into a memory buffer, or through the VideoPlayer.AudioTrackGetTempFilePathname and VideoPlayer.AudioTrackGetTempFileSize methods, when the audio stream is extracted into a temporary file.
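Once the audio track is available as WAV PCM data, an application can also analyze it on its own. The sketch below scans a mono 16-bit PCM WAV buffer for initial and final silence using a simple amplitude threshold. It is an illustration only: the function names and the threshold value are assumptions, the test buffer is generated in memory, and this is not the algorithm used by the component's own silence detection methods.

```python
import io
import struct
import wave


def make_test_wav(samples, sample_rate=8000):
    """Build a mono 16-bit PCM WAV file in memory (stand-in for an
    extracted audio track)."""
    buffer = io.BytesIO()
    with wave.open(buffer, "wb") as writer:
        writer.setnchannels(1)
        writer.setsampwidth(2)
        writer.setframerate(sample_rate)
        writer.writeframes(struct.pack("<%dh" % len(samples), *samples))
    return buffer.getvalue()


def silence_bounds_ms(wav_bytes, threshold=500):
    """Return (initial_silence_ms, final_silence_ms) for mono 16-bit PCM."""
    with wave.open(io.BytesIO(wav_bytes), "rb") as reader:
        if reader.getnchannels() != 1 or reader.getsampwidth() != 2:
            raise ValueError("this sketch handles mono 16-bit PCM only")
        rate = reader.getframerate()
        frames = reader.readframes(reader.getnframes())
    # WAV PCM samples are little-endian signed 16-bit integers.
    samples = struct.unpack("<%dh" % (len(frames) // 2), frames)
    loud = [i for i, sample in enumerate(samples) if abs(sample) > threshold]
    if not loud:
        total_ms = len(samples) * 1000 // rate
        return (total_ms, total_ms)
    head_ms = loud[0] * 1000 // rate
    tail_ms = (len(samples) - 1 - loud[-1]) * 1000 // rate
    return (head_ms, tail_ms)
```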

When the video clip is no longer needed, you can discard it from memory by calling the VideoPlayer.Close method. As a side note, calling this method is not needed when loading a new video clip because it will be called internally by the control.

NOTE ABOUT TEMPO AND PLAYBACK RATE CHANGE: It's important to note that not all video codecs are able to manage playback rate changes correctly. For example, certain kinds of AVI codecs will lose synchronization between audio and video when calling the Effects.PlaybackTempSet or the Effects.PlaybackRateSet methods: this is not a limitation of our control but a defect of the codec when running the video at a speed different from normal. Furthermore, other video codecs are not fast enough to provide enough PCM sound data for tempo and/or playback rate changes, so you could experience "stuttering" during playback: when dealing with codecs that cause stuttering, it is better to avoid the VideoPlayer.LoadForTempoChange method.

With regard to the filter graph created after loading the video clip, you can enumerate all of the available filters through the VideoPlayer.FiltersInGraphGetCount and VideoPlayer.FiltersInGraphGetName methods and determine, for each of them, the class identifier through the VideoPlayer.FiltersInGraphGetClsid method and the availability of a property page through the VideoPlayer.FiltersInGraphHasPropertyPage method. If a property page is available, it can be displayed through the VideoPlayer.FiltersInGraphShowPropertyPage method: in this way you can programmatically give access to specific settings of the filter.

If you need to take a screenshot of what is currently being played on a specific video window, you can use the VideoPlayer.ScreenshotSaveToFile method.

Audio DJ Studio comes with a custom transform filter which allows applying adjustments to video frames when the video source decoder supports the YUY2 color space; supported adjustments are Brightness, Contrast, Saturation, Hue and Gamma Correction. Image adjustment can be enabled through the VideoPlayer.ImageAdjustEnable method, and the value of each of the available adjustments can be retrieved or modified through the VideoPlayer.ImageAdjustPropertyGet and VideoPlayer.ImageAdjustPropertySet methods.
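To give an idea of why the YUY2 color space lends itself to this kind of adjustment, the sketch below applies brightness and contrast directly to the luma bytes of a packed YUY2 buffer, where each pair of pixels is stored as Y0 U Y1 V. The function name, the parameter scales and the choice of 128 as the contrast pivot are assumptions made for the example; the formulas used by the component's filter are not documented here.

```python
def adjust_yuy2_luma(frame, brightness=0, contrast=1.0):
    """Apply brightness/contrast to the luma bytes of a packed YUY2 buffer.

    In YUY2 the luma (Y) samples sit at even byte offsets, while the
    chroma (U, V) samples sit at odd offsets and are left untouched.
    """
    if len(frame) % 4 != 0:
        raise ValueError("a YUY2 buffer holds whole 4-byte pixel pairs")
    out = bytearray(frame)
    for i in range(0, len(out), 2):
        # Assumption: contrast pivots around mid-gray (128).
        luma = (out[i] - 128) * contrast + 128 + brightness
        out[i] = max(0, min(255, round(luma)))
    return bytes(out)
```

Saturation and hue would act on the chroma bytes instead, which is why a packed luma/chroma format is convenient for these adjustments.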

Another feature of the custom transform filter just mentioned is the possibility to display an On-Screen-Display (also known as OSD), which allows rendering a composition of graphical objects (mainly bitmaps and text) over the video clips being played. It's important to note that the OSD of the video player (unlike the one available on the VideoMixer object) is always sized according to the native dimensions of the video clip being played so, when resizing the output window or going full screen, the OSD is automatically scaled to best fit the area of the output window and the size of the contained graphical objects is scaled as well.

The OSD can be enabled/disabled through the VideoPlayer.OSDEnable method: when the OSD is disabled it doesn't appear on the screen. You can know if the OSD is enabled or disabled through the VideoPlayer.OSDIsEnabled method.

In order to avoid covering the video clips being played, you can define, through the VideoPlayer.OSDKeyColorSet method, a key color that will always be rendered as totally transparent on the OSD; for example, you could create a bitmap with a black background.

By setting the key color to "black", the black background of the bitmap becomes transparent and only the remaining pixels of the bitmap are rendered over the video frames.
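Color keying can be sketched in a few lines: every OSD pixel that matches the key color lets the video pixel show through, while any other pixel is drawn over the video, optionally blended through an alpha value. The function names are hypothetical and the exact-match comparison is an assumption; the sketch illustrates the concept and not the component's internal rendering.

```python
def composite_pixel(video_rgb, osd_rgb, key_rgb, alpha=255):
    """Blend one OSD pixel over one video pixel.

    Pixels equal to the key color are fully transparent; all others are
    blended with the given alpha (0 = invisible, 255 = opaque).
    """
    if osd_rgb == key_rgb:
        return video_rgb
    return tuple(
        (o * alpha + v * (255 - alpha) + 127) // 255
        for o, v in zip(osd_rgb, video_rgb)
    )


def composite_row(video_row, osd_row, key_rgb, alpha=255):
    """Apply composite_pixel to a whole row of pixels."""
    return [
        composite_pixel(v, o, key_rgb, alpha)
        for v, o in zip(video_row, osd_row)
    ]
```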

Graphical objects (also known as "items") that can be added to compose the OSD are:

- Picture files: you can add a picture file through the VideoPlayer.OSDItemPictureFileAdd method and change the picture through the VideoPlayer.OSDItemPictureFileChange method.

- Picture files stored in memory: you can add a picture file stored inside a memory buffer through the VideoPlayer.OSDItemPictureMemoryAdd method and change the picture through the VideoPlayer.OSDItemPictureMemoryChange method.

- Bitmaps: you can add a bitmap stored in memory, accessible through its HBITMAP handle, through the VideoPlayer.OSDItemBitmapAdd method and change the bitmap through the VideoPlayer.OSDItemBitmapChange method.

- Strings of text: you can add a string of text through the VideoPlayer.OSDItemTextAdd method and change the string characters through the VideoPlayer.OSDItemTextChange method. With these methods you can also define both color and font for each single string of text.

- Outlined strings of text: you can add an outlined string of text through the VideoPlayer.OSDItemOutlineTextAdd method and change the string characters through the VideoPlayer.OSDItemOutlineTextChange method. With these methods you can also define both color and font for each single string of text.

- Rectangles: you can add a rectangle through the VideoPlayer.OSDItemRectangleAdd method and change its size and color through the VideoPlayer.OSDItemRectangleChange method.

There is no limit on the number of items you can add to the OSD but keep in mind that the higher the number of objects, the higher the CPU load when you animate the OSD contents, for example when scrolling a text item.

After adding a picture item from a file, from a memory buffer or from an HBITMAP handle, you can obtain the handle (HBITMAP) to a memory bitmap, optionally resized to custom dimensions, through the VideoPlayer.OSDItemBitmapGet method.

Each item has the following set of properties:

- Position: the VideoPlayer.OSDItemMove and VideoPlayer.OSDItemScrollByPixels methods change the position of each item: this allows "animating" the OSD, for example by scrolling the item horizontally or vertically. After every position change, you can check whether the item is still visible on the video rendering window through the VideoPlayer.OSDItemIsOnVisibleArea method. The rectangle surrounding a specific item, with coordinates and size expressed as a percentage of the video clip dimensions, can be obtained through the VideoPlayer.OSDItemRectGet method; this is quite useful for items containing a string of text, whose total width and height depend upon the chosen font size.

- Z-Order: the VideoPlayer.OSDItemZOrderSet method determines which item will appear on top of the others.

- Visibility: the VideoPlayer.OSDItemShow method shows/hides the item.

- Transparency: the VideoPlayer.OSDItemAlphaSet method changes the alpha channel transparency of the item so that it does not totally cover what is behind its position.
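The geometry behind the position-related properties can be sketched as follows: a rectangle expressed as a percentage of the clip dimensions is converted to pixels, a visibility test checks whether it still overlaps the frame, and a scrolling loop moves it until it leaves the frame. All names and the (left, top, width, height) tuple layout are assumptions made for the example; the sketch does not call the component.

```python
def percent_rect_to_pixels(rect_pct, video_w, video_h):
    """Convert (left, top, width, height) from percent of the clip size
    to pixels."""
    left, top, width, height = rect_pct
    return (
        round(left * video_w / 100),
        round(top * video_h / 100),
        round(width * video_w / 100),
        round(height * video_h / 100),
    )


def is_on_visible_area(rect_px, video_w, video_h):
    """Return True while at least part of the rectangle overlaps the frame."""
    left, top, width, height = rect_px
    return (left < video_w and top < video_h
            and left + width > 0 and top + height > 0)


def scroll_left(rect_px, step_px, video_w, video_h):
    """Yield successive left coordinates of an item scrolling leftwards
    until it is no longer visible."""
    if step_px <= 0:
        raise ValueError("step_px must be positive")
    left, top, width, height = rect_px
    while is_on_visible_area((left, top, width, height), video_w, video_h):
        yield left
        left -= step_px
```

An application animating a text ticker would typically restart the item from the right edge once the visibility test fails.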

Each item can be removed at any time from the list of items through the VideoPlayer.OSDItemRemove method.

If the graphical features of the OSD are not enough for your needs, remember that you can perform custom graphical rendering inside a memory bitmap through the Windows GDI or GDI+ API, display the resulting bitmap, through its HBITMAP handle, in one single shot through the VideoPlayer.OSDItemBitmapAdd method and update it in real time through the VideoPlayer.OSDItemBitmapChange method.

Examples of use of the VideoPlayer class in Visual C# and Visual Basic.NET can be found inside the following samples installed with the product's setup package:

- AsioVideoPlayer

- VideoMixer

- VideoPlayerSimple

- VideoPlayerAdvanced (contains an example of usage of image adjustments and of the On Screen Display)

- VideoPlayerMultipleOutout

- VideoWaveform