
How to access settings of audio devices in Windows Vista and later versions


[This tutorial applies to Windows Vista and later versions only]

Starting with Windows Vista, Microsoft rewrote the multimedia subsystem of the Windows operating system from the ground up and, at the same time, introduced a new API, known as the Core Audio API, which allows interacting with the multimedia subsystem and with audio endpoint devices (sound cards).

The Core Audio APIs implemented in Windows Vista and later versions are the following:

• Multimedia Device (MMDevice) API. Clients use this API to enumerate the audio endpoint devices in the system.

• DeviceTopology API. Clients use this API to directly access the topological features (for example, volume controls and multiplexers) that lie along the data paths inside hardware devices in audio adapters.

• EndpointVolume API. Clients use this API to directly access the volume controls on audio endpoint devices. This API is primarily used by applications that manage exclusive-mode audio streams.

• Windows Audio Session API (WASAPI). Clients use this API to create and manage audio streams to and from audio endpoint devices.

Audio Dj Studio API for .NET encapsulates a small portion of these APIs inside a set of methods and events that allow communicating with audio endpoint devices: requesting information about their status, getting and setting the volume level, muting and unmuting specific channels, reading the values of embedded VU-meters and so on.

All of these features, with the exception of WASAPI, are exposed by the CoreAudioDevicesMan class, which is accessible through the CoreAudioDevices property. This tutorial doesn't describe how to manage the audio flow for playback and recording through WASAPI: this specific topic is covered in the tutorial How to manage audio flow through WASAPI.

If you open the "Sound" applet available inside the Control Panel of Windows Vista and higher versions, you will see on the "Playback" tab a list of audio rendering devices:

If you click the "Recording" tab you will see a list of audio capture devices:

Unlike in versions of Windows older than Vista (XP and earlier), "Playback" and "Recording" devices are no longer seen as a single sound card with different sets of input or output channels: each device is seen as a separate entity, now called an "audio endpoint device".

The new Core Audio API distinguishes "Playback" devices from "Recording" devices by the direction of the audio data flow:

• "Render audio endpoint devices" are playback devices: audio data flows from the application to the audio endpoint device, which renders the stream.

• "Capture audio endpoint devices" are recording devices: audio data flows from the audio endpoint device, which captures the stream, to the application.

Each audio endpoint device is displayed by the Control Panel with a set of information as seen in the following image:

After selecting a specific audio endpoint device and pressing the "Properties" button, a new set of tabs related to settings of the selected device will be displayed:

If supported by the sound card driver, you can find information about the jacks available on the sound card connector (channel mapping, location, size and color).

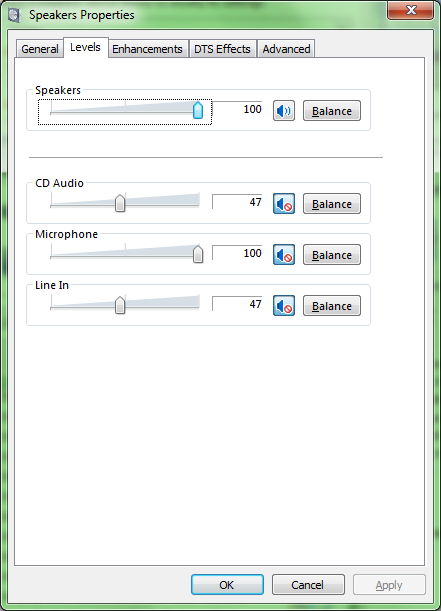

By clicking the "Levels" tab you can set the volume of the endpoint, its "mute" state and the channel balance:

The sound applet of the Windows control panel can be programmatically displayed through the CoreAudioDevices.DisplaySoundApplet method.

The coverage of the Core Audio API implemented by our component can be summarized in the following points:

• Enumeration and status retrieval of audio endpoint devices

• Volume, balance and muting management

• VU-Meters of audio endpoint devices

Enumeration and status retrieval of audio endpoint devices

Audio endpoint devices are enumerated through the CoreAudioDevices.Enum method, which lets you choose which devices to enumerate depending on their current status. For example, you could omit devices that are installed but disabled, or devices that are installed and enabled but not plugged into a physical input/output device such as speakers or a microphone. As you may know, starting with Windows Vista, sound card drivers can implement so-called "jack sensing", which informs the system when no physical input/output device is plugged in.
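Under the hood, the Windows MMDevice API filters endpoints with a bit mask of DEVICE_STATE_* flags; the values below are the ones declared in the Windows SDK header mmdeviceapi.h. The following Python sketch is only a model of that filtering, not the component's API: Endpoint and enumerate_endpoints are hypothetical names and the device list is made up for illustration. It keeps the devices whose state matches the mask and splits them into render and capture lists addressed by zero-based index.

```python
from dataclasses import dataclass

# State flags as declared in the Windows SDK header mmdeviceapi.h
DEVICE_STATE_ACTIVE = 0x1      # enabled and plugged in
DEVICE_STATE_DISABLED = 0x2    # disabled by the user
DEVICE_STATE_NOTPRESENT = 0x4  # hardware not present
DEVICE_STATE_UNPLUGGED = 0x8   # enabled, but jack sensing reports nothing plugged in
DEVICE_STATEMASK_ALL = 0xF


@dataclass
class Endpoint:
    # Hypothetical stand-in for an audio endpoint device
    name: str
    is_render: bool  # True for playback (render), False for recording (capture)
    state: int       # exactly one DEVICE_STATE_* flag


def enumerate_endpoints(devices, state_mask):
    """Return (render, capture) lists with only the devices whose state is in state_mask."""
    selected = [d for d in devices if d.state & state_mask]
    render = [d for d in selected if d.is_render]
    capture = [d for d in selected if not d.is_render]
    return render, capture


devices = [
    Endpoint("Speakers", True, DEVICE_STATE_ACTIVE),
    Endpoint("Headphones", True, DEVICE_STATE_UNPLUGGED),
    Endpoint("Microphone", False, DEVICE_STATE_ACTIVE),
    Endpoint("Line In", False, DEVICE_STATE_DISABLED),
]

# Skip disabled devices and devices with nothing plugged into their jack
render, capture = enumerate_endpoints(devices, DEVICE_STATE_ACTIVE)
print([d.name for d in render], [d.name for d in capture])  # ['Speakers'] ['Microphone']
```

Widening the mask to DEVICE_STATE_ACTIVE | DEVICE_STATE_UNPLUGGED would also list the headphones, which corresponds to enumerating devices that are enabled but currently unplugged.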

During enumeration, the component internally creates two separate lists, one for "Render" devices and one for "Capture" devices; each element of each list is accessible through its zero-based index. The number of devices in each list can be obtained through the CoreAudioDevices.CountGet method. Once you know the number of devices in each list, you can obtain further information through the following methods:

• CoreAudioDevices.DescGet retrieves the friendly name and description of the device.

• CoreAudioDevices.StatusGet retrieves the status of the device, which may change during the application lifetime. Alternatively, the container application can be informed in real time about changes of the device status through the CallbackForCoreAudioEvents delegate, with the nEvent parameter set to EV_COREAUDIO_DEVICE_STATE_CHANGED and the nData3 parameter reporting the current status; this happens, for example, when a USB device is plugged in or unplugged, or when the microphone is plugged into or unplugged from its connector.

• CoreAudioDevices.ChannelCountGet retrieves the total number of input or output channels of the device.

• CoreAudioDevices.JackCountGet retrieves the total number of jacks available on the connector of the device.

• CoreAudioDevices.TypeGet retrieves the type of the device.

You can determine the current system default audio endpoint device through the CoreAudioDevices.DefaultGet method; alternatively, the container application can be notified about changes of the system default device through the CallbackForCoreAudioEvents delegate with the nEvent parameter set to EV_COREAUDIO_DEVICE_DEFAULT_CHANGE. The system default device can be set programmatically through the CoreAudioDevices.DefaultSet method.

When a USB audio device is added to the system, for example when a USB sound card is installed for the first time, the container application can be informed in real time through the CallbackForCoreAudioEvents delegate with the nEvent parameter set to EV_COREAUDIO_DEVICE_ADDED.

After a device has been added to the system, it may still be reported as "Disabled" because of the jack-sensing feature, which keeps a device disabled until a microphone or line-in source is physically plugged into its jack; when the audio device becomes "Enabled" or "Disabled", the CallbackForCoreAudioEvents delegate, with the nEvent parameter set to EV_COREAUDIO_DEVICE_STATE_CHANGED and the nData3 parameter reporting the current status, may be invoked a few times.

When the USB audio device is uninstalled from the system, by physically removing the device and uninstalling its driver, the container application can be informed in real time through the CallbackForCoreAudioEvents delegate with the nEvent parameter set to EV_COREAUDIO_DEVICE_REMOVED.

After catching these events, the container application should perform a new enumeration, by calling the CoreAudioDevices.Enum method again, in order to keep the lists of render and capture devices up to date.
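The re-enumeration pattern described above can be sketched as follows. This is a Python model, not the component's API: the event names come from this tutorial, but the CoreAudioEvent values and the DeviceListCache class are hypothetical, and enumerate_fn stands in for whatever wrapper around CoreAudioDevices.Enum your application uses.

```python
from enum import Enum, auto


class CoreAudioEvent(Enum):
    # Event names as used in this tutorial; the values are placeholders,
    # not the constants defined by the component.
    EV_COREAUDIO_DEVICE_ADDED = auto()
    EV_COREAUDIO_DEVICE_REMOVED = auto()
    EV_COREAUDIO_DEVICE_STATE_CHANGED = auto()
    EV_COREAUDIO_DEVICE_DEFAULT_CHANGE = auto()
    EV_COREAUDIO_DEVICE_VOLUME_CHANGE = auto()


# Events after which the render/capture lists may be out of date
REENUMERATE_ON = {
    CoreAudioEvent.EV_COREAUDIO_DEVICE_ADDED,
    CoreAudioEvent.EV_COREAUDIO_DEVICE_REMOVED,
    CoreAudioEvent.EV_COREAUDIO_DEVICE_STATE_CHANGED,
}


class DeviceListCache:
    """Keeps a device list current by re-enumerating after topology events."""

    def __init__(self, enumerate_fn):
        self._enumerate = enumerate_fn  # stand-in for a call to CoreAudioDevices.Enum
        self.enum_count = 0
        self.devices = []
        self.refresh()

    def refresh(self):
        self.devices = self._enumerate()
        self.enum_count += 1

    def on_core_audio_event(self, event):
        # State changes may arrive several times in a row because of jack sensing;
        # a real application might debounce them, this sketch re-enumerates each time.
        if event in REENUMERATE_ON:
            self.refresh()


cache = DeviceListCache(lambda: ["Speakers", "Microphone"])
cache.on_core_audio_event(CoreAudioEvent.EV_COREAUDIO_DEVICE_ADDED)
cache.on_core_audio_event(CoreAudioEvent.EV_COREAUDIO_DEVICE_VOLUME_CHANGE)
print(cache.enum_count)  # 2: initial enumeration plus one after DEVICE_ADDED
```

Volume and default-device notifications do not change which devices exist, so the sketch leaves the lists alone for those events.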

When access to audio endpoint devices is no longer needed, the related resources can be released by calling the CoreAudioDevices.Free method.

Volume, balance and muting management

For each audio endpoint device, the application can retrieve and set the following types of volume:

• Master volume: obtained through the CoreAudioDevices.MasterVolumeGet method and modified through the CoreAudioDevices.MasterVolumeSet method.

• Per-channel volume: after determining the number of available channels through the CoreAudioDevices.ChannelCountGet method, the volume of each available channel can be obtained through the CoreAudioDevices.ChannelVolumeGet method and modified through the CoreAudioDevices.ChannelVolumeSet method.

• Mute status: obtained through the CoreAudioDevices.MuteGet method and modified through the CoreAudioDevices.MuteSet method.

For all of the elements above, the container application can be informed in real time about changes made through the Windows Control Panel or by other applications, through the CallbackForCoreAudioEvents delegate with the nEvent parameter set to EV_COREAUDIO_DEVICE_VOLUME_CHANGE.
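The tutorial does not specify how a balance setting maps onto per-channel volumes; the Python sketch below shows one common convention, offered only as an assumption: the louder side keeps the full level and the other side is attenuated linearly. The resulting values could then be applied channel by channel through ChannelVolumeSet, assuming channel 0 is left and channel 1 is right as in the usual stereo layout. The function names are hypothetical.

```python
def balance_to_channels(level, balance):
    """Split a volume level (0.0-1.0) into (left, right) channel levels.

    balance ranges from -1.0 (full left) to +1.0 (full right); 0.0 is centred.
    The louder side keeps the full level; the other side is attenuated linearly.
    """
    if not 0.0 <= level <= 1.0 or not -1.0 <= balance <= 1.0:
        raise ValueError("level must be in [0, 1] and balance in [-1, 1]")
    left = level * min(1.0, 1.0 - balance)
    right = level * min(1.0, 1.0 + balance)
    return left, right


def channels_to_balance(left, right):
    """Inverse of balance_to_channels: recover (level, balance) from channel levels."""
    level = max(left, right)
    if level == 0.0:
        return 0.0, 0.0  # both channels silent: balance is undefined, report centred
    # The quieter side tells how far the balance is shifted toward the louder side
    if right < left:
        return level, -(1.0 - right / level)
    return level, 1.0 - left / level


left, right = balance_to_channels(0.8, 0.5)  # shifted halfway to the right
print(left, right)
print(channels_to_balance(left, right))
```

Keeping the louder side at the full level means that moving the balance never raises the overall loudness above the master setting.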

The volume applied to audio endpoint devices can be expressed either as a linear percentage or in dB; a value in dB is based on a logarithmic scale, according to the following rules:

• a value of -100 mutes the sound

• values higher than -100 and lower than 0 attenuate the sound

• a value of 0 keeps the sound level unchanged

• values higher than 0 amplify the sound
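dB values describe amplitude ratios on a logarithmic scale: a gain of g dB multiplies the signal amplitude by 10^(g/20), so 0 dB leaves it unchanged, -6 dB roughly halves it and +20 dB multiplies it by ten. The Python helpers below (hypothetical names) apply that standard formula and treat -100 dB as silence, mirroring the rule above; strictly speaking, -100 dB is an amplitude factor of 0.00001, which is inaudible in practice.

```python
import math

SILENCE_DB = -100.0  # per the rules above, -100 dB is treated as silence


def db_to_gain(db):
    """Convert a level in dB to a linear amplitude factor (1.0 = unchanged)."""
    if db <= SILENCE_DB:
        return 0.0
    return 10.0 ** (db / 20.0)


def gain_to_db(gain):
    """Convert a linear amplitude factor to dB, clamping silence to SILENCE_DB."""
    if gain <= 0.0:
        return SILENCE_DB
    return max(SILENCE_DB, 20.0 * math.log10(gain))


print(db_to_gain(0.0))              # 1.0
print(round(db_to_gain(-6.0), 3))   # 0.501
print(db_to_gain(20.0))             # 10.0
print(round(gain_to_db(0.5), 2))    # -6.02
```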

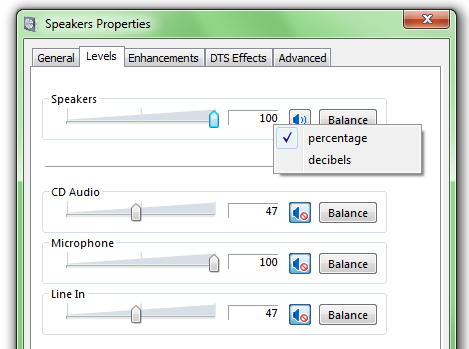

In the "Sound" applet of the Control Panel you can choose which unit of measure to use by right-clicking the volume edit box: a context menu listing the available units of measure appears, as shown in the screenshot below:

Using the volume-related methods mentioned above, you can choose the unit of measure (percentage or dB) of the volume values obtained from or set on the audio endpoint device.

IMPORTANT NOTE ABOUT UNITS OF MEASURE: when dealing with percentage values, the effective volume can have a different meaning depending on the implementation of the sound card driver. A percentage value of 100 is usually assumed to correspond to 0 dB, but this is not the case with the Core Audio API: if the sound card driver allows a volume range from -100 dB (total silence) to +20 dB (an amplification of 20 dB), a percentage value of 100 means that the applied volume is +20 dB. The range of volume values accepted by the sound card driver, together with the supported value stepping, can be obtained by calling the CoreAudioDevices.MasterVolumeGet method.

IMPORTANT NOTE ABOUT CONVERSION BETWEEN UNITS OF MEASURE: when a volume expressed in percentage is set through one of our component's methods, the value displayed in the "Sound" applet of the Control Panel may differ slightly from the one effectively set. This is due to rounding when converting from percentage to dB (all values are stored in dB inside the driver). If this is a problem for you, always use dB as the unit of measure when calling our component's methods, and set the "Sound" applet of the Control Panel to display values in dB instead of the default percentage.
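The following Python sketch illustrates both notes: a percentage is interpreted relative to the driver's own dB range, and snapping to the driver's stepping means that a percentage written and then read back may not match. It assumes a linear mapping between percentage and dB and an example driver range of -100 dB to +20 dB with 1.5 dB steps; Windows may apply a different (audio-tapered) curve and real drivers report their own range and stepping, so the exact numbers will differ. The function names are hypothetical.

```python
def percent_to_db(percent, min_db, max_db, step_db):
    """Map 0-100 % onto the driver range [min_db, max_db] and snap to step_db.

    ASSUMPTION: a linear percent-to-dB mapping, chosen for illustration only.
    """
    percent = min(100.0, max(0.0, percent))
    db = min_db + (max_db - min_db) * percent / 100.0
    # Assume the driver only stores whole multiples of its stepping
    steps = round((db - min_db) / step_db)
    return min(max_db, min_db + steps * step_db)


def db_to_percent(db, min_db, max_db):
    """Inverse linear mapping, as a UI showing percentages might compute it."""
    return 100.0 * (db - min_db) / (max_db - min_db)


MIN_DB, MAX_DB, STEP_DB = -100.0, 20.0, 1.5  # example driver range and stepping

print(percent_to_db(100, MIN_DB, MAX_DB, STEP_DB))  # 20.0: 100 % is +20 dB, not 0 dB
stored = percent_to_db(33, MIN_DB, MAX_DB, STEP_DB)
print(stored)                                       # -61.0
print(db_to_percent(stored, MIN_DB, MAX_DB))        # 32.5
```

A value written as 33 % is stored as -61 dB and read back as 32.5 %, which is the kind of small discrepancy the note describes; working directly in dB avoids the round trip.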

VU-Meters of audio endpoint devices

As seen in one of the images above, most audio endpoint devices have an associated VU-meter that shows activity on the audio data flow. Note that these VU-meters report not only the audio activity of the container application but the activity of any running application on that specific audio endpoint device. For capture devices, the VU-meter does not act as a monitor: it only reports the activity of recording/capture sessions started by the container application or by other applications running on the system. For example, you can see VU-meters moving inside the "Recording" tab of the "Sound" applet of the Control Panel because the applet itself starts a capture session.

The container application can obtain peak levels for an audio endpoint device by enabling monitoring of VU-meter activity through the CoreAudioDevices.VuMeterEnableNotifications method. Once monitoring is enabled, the container application is notified each time a new peak value is detected through the CallbackForCoreAudioEvents delegate with the nEvent parameter set to EV_COREAUDIO_DEVICE_VUMETER_CHANGE; peak levels can then be obtained for the master channel through the CoreAudioDevices.VuMeterMasterPeakValueGet method and for each separate channel of the audio endpoint device through the CoreAudioDevices.VuMeterChannelPeakValueGet method. As mentioned before, the number of available channels can be determined through the CoreAudioDevices.ChannelCountGet method.
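The component returns peak values directly; to clarify what they represent, the Python sketch below computes per-channel peaks (0.0 to 1.0) from a buffer of interleaved floating-point samples and models the master peak as the loudest channel's peak. The sample buffer, the function names and the clipping to 1.0 are assumptions for illustration, not the component's implementation.

```python
def channel_peaks(samples, channels):
    """Peak absolute value (clipped to 0.0-1.0) of each channel in an interleaved buffer."""
    if channels <= 0 or len(samples) % channels:
        raise ValueError("buffer length must be a multiple of the channel count")
    peaks = [0.0] * channels
    for i, sample in enumerate(samples):
        ch = i % channels  # interleaved layout: L, R, L, R, ...
        peaks[ch] = max(peaks[ch], min(1.0, abs(sample)))
    return peaks


def master_peak(peaks):
    """Master peak modelled as the loudest channel's peak (0.0 if there are no channels)."""
    return max(peaks, default=0.0)


stereo = [0.1, -0.5, 0.3, 0.2, -0.7, 0.05]  # three stereo frames
peaks = channel_peaks(stereo, 2)
print(peaks, master_peak(peaks))  # [0.7, 0.5] 0.7
```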

The connector of a sound card has a set of jacks that can be used to connect input devices (for example a microphone) and output devices (for example one or more sets of speakers). Modern sound card drivers allow enumerating all of the available jacks and can report, for each jack, information such as its location (for example the rear or front panel of the PC), size (for example 1/8 inch or 1/4 inch), color (speakers are usually connected to the green jack and the microphone to the pink jack) and channel mapping (for example, render devices configured for a 5.1 channel configuration can report whether the jack is used for the front, rear or center/subwoofer speakers).

The number of available jacks can be determined through the CoreAudioDevices.JackCountGet method; once the total number of jacks is available, you can obtain information for each single jack through the CoreAudioDevices.JackDescriptorGet method.
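Assuming the jack descriptor exposes the channel mapping as the standard Windows speaker bit mask (the SPEAKER_* flags also used by WAVEFORMATEXTENSIBLE and KSJACK_DESCRIPTION), it can be decoded as in the following Python sketch. The flag values are those defined in the Windows SDK; the function names and the rough front/rear/center classification are hypothetical.

```python
# Speaker position flags as defined in the Windows SDK (ksmedia.h / mmreg.h)
SPEAKER_FLAGS = [
    (0x1, "front left"),
    (0x2, "front right"),
    (0x4, "front center"),
    (0x8, "subwoofer (LFE)"),
    (0x10, "back left"),
    (0x20, "back right"),
    (0x40, "front left of center"),
    (0x80, "front right of center"),
    (0x100, "back center"),
    (0x200, "side left"),
    (0x400, "side right"),
]


def describe_channel_mapping(mask):
    """Return the speaker positions encoded in a channel-mapping bit mask."""
    return [name for bit, name in SPEAKER_FLAGS if mask & bit]


def classify_jack(mask):
    """Rough 5.1 role of a jack: front, rear or center/subwoofer speakers."""
    if mask & (0x4 | 0x8):                     # front center or LFE
        return "center/subwoofer"
    if mask & (0x10 | 0x20 | 0x200 | 0x400):   # back or side speakers
        return "rear"
    if mask & (0x1 | 0x2):                     # front left/right
        return "front"
    return "unknown"


print(describe_channel_mapping(0x3), classify_jack(0x3))  # ['front left', 'front right'] front
print(classify_jack(0x4 | 0x8))                            # center/subwoofer
```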

Some sound card drivers provide access to subunits, also known as "parts" or "subparts", which define further parameters related to volume or muting settings; these subunits are usually accessible from the "Levels" tab of a specific audio device and can be available for both render and capture devices.

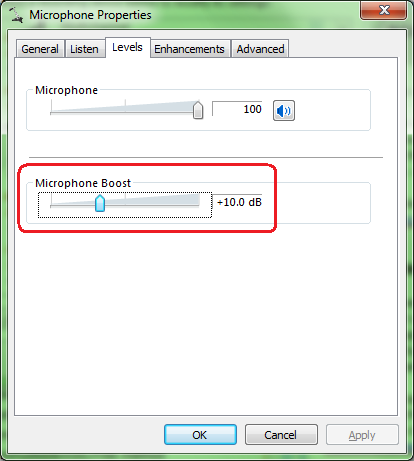

A typical example, for capture devices, is the microphone boost setting which allows applying an amplification of the audio data incoming from the microphone. When available, this setting can be accessed through the Control Panel in the following way:

• open the Control Panel

• open the Sound applet

• select the "Recording" tab

• if available, select the "Microphone" capture device

• press the "Properties" button

• select the "Levels" tab: if the driver implements microphone boost you should see something similar:

By moving the slider of the "Microphone Boost" setting, set to 0 dB by default, you can increase the amplification applied to the incoming audio data. Note that the available range has a maximum and that the value in dB does not change continuously but in predefined steps: the image above refers to a microphone whose maximum boost is +30 dB, with steps of 10 dB, so the boost can only be set to +0 dB, +10 dB, +20 dB or +30 dB.
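A stepped control such as the microphone boost only accepts discrete values between its minimum and maximum. The Python sketch below (hypothetical function names) lists the accepted levels and snaps a requested value to the nearest one; the same arithmetic would apply to any range and stepping reported for a part, for example through CoreAudioDevices.PartsVolumeRangeGet.

```python
def allowed_levels(min_db, max_db, step_db):
    """List every level a stepped control accepts, e.g. 0, 10, 20, 30 dB."""
    count = int(round((max_db - min_db) / step_db))
    return [min_db + i * step_db for i in range(count + 1)]


def snap_to_step(requested_db, min_db, max_db, step_db):
    """Clamp a requested level to the range and round it to the nearest step."""
    clamped = min(max_db, max(min_db, requested_db))
    return min_db + round((clamped - min_db) / step_db) * step_db


print(allowed_levels(0.0, 30.0, 10.0))        # [0.0, 10.0, 20.0, 30.0]
print(snap_to_step(14.0, 0.0, 30.0, 10.0))    # 10.0
print(snap_to_step(45.0, 0.0, 30.0, 10.0))    # 30.0 (clamped to the maximum boost)
```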

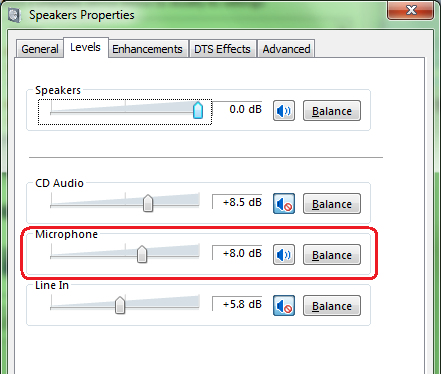

Another example, this time for render devices, is the ability to set whether and how the CD Audio, Microphone and Line In channels are played back through the render device. When available, these settings can be accessed through the Control Panel in the following way:

• open the Control Panel

• open the Sound applet

• select the "Playback" tab

• select a render device (in this case we will use the "Speakers" device implemented by the "VIA High Definition Audio" driver)

• press the "Properties" button

• select the "Levels" tab: if the driver implements management of parts you should see something similar:

In the screenshot above we have highlighted the "Microphone" part because the following description refers to this specific setting, but it applies equally to all of the other available parts. By moving the "Microphone" slider you can change the volume of the sound coming from the microphone, and by clicking the small blue speaker icon you can mute or unmute the channel; the "balance" button allows setting different volume levels for the left and right channels of the microphone.

Our component allows enumerating the available subparts of each render or capture audio endpoint device and accessing their settings through the following methods:

• CoreAudioDevices.PartsCountGet obtains the number of subparts implemented by the given audio endpoint device

• CoreAudioDevices.PartsNameGet obtains the friendly name of a given subpart

• CoreAudioDevices.PartsChannelsCountGet obtains the number of channels of the subpart

• CoreAudioDevices.PartsMuteGet and CoreAudioDevices.PartsMuteSet obtain and modify the muting status of the subpart

• CoreAudioDevices.PartsVolumeRangeGet obtains the range of acceptable volume values and the related stepping

• CoreAudioDevices.PartsVolumeGet and CoreAudioDevices.PartsVolumeSet obtain and modify the overall volume level of the subpart or of a specific channel

When one of the mentioned settings is modified through the Sound applet of the Windows Control Panel, the container application can be notified through the CallbackForCoreAudioEvents delegate having the nEvent parameter set to EV_COREAUDIO_DEVICE_PART_CHANGE.

The Windows Volume Mixer manages session volume by providing individual volume controls for each audio application running on the system: through its sliders, users can set separate volume levels for different applications, such as music players, browsers or games.

Our component allows the container application to access these volume settings through the following methods:

• CoreAudioDevices.SessionMuteGet obtains the muting state of the session.

• CoreAudioDevices.SessionMuteSet modifies the muting state of the session.

• CoreAudioDevices.SessionVolumeGet obtains the volume of the session.

• CoreAudioDevices.SessionVolumeSet modifies the volume of the session.

When one of these settings is modified through the Windows Volume Mixer, the container application can be notified through the CallbackForCoreAudioEvents delegate with the nEvent parameter set to EV_COREAUDIO_SESSION_VOLUME_CHANGE.

An example of how to use the Core Audio API in Visual C#.NET and Visual Basic.NET can be found in the following sample installed with the product's setup package:

- CoreAudioDevices